AI Governance

AI models are increasingly used for predictive analytics and are becoming further integrated into our daily lives. As a result, the need for high-quality, trustworthy, and ethically sourced training data is on the rise, which highlights the importance of good data governance.

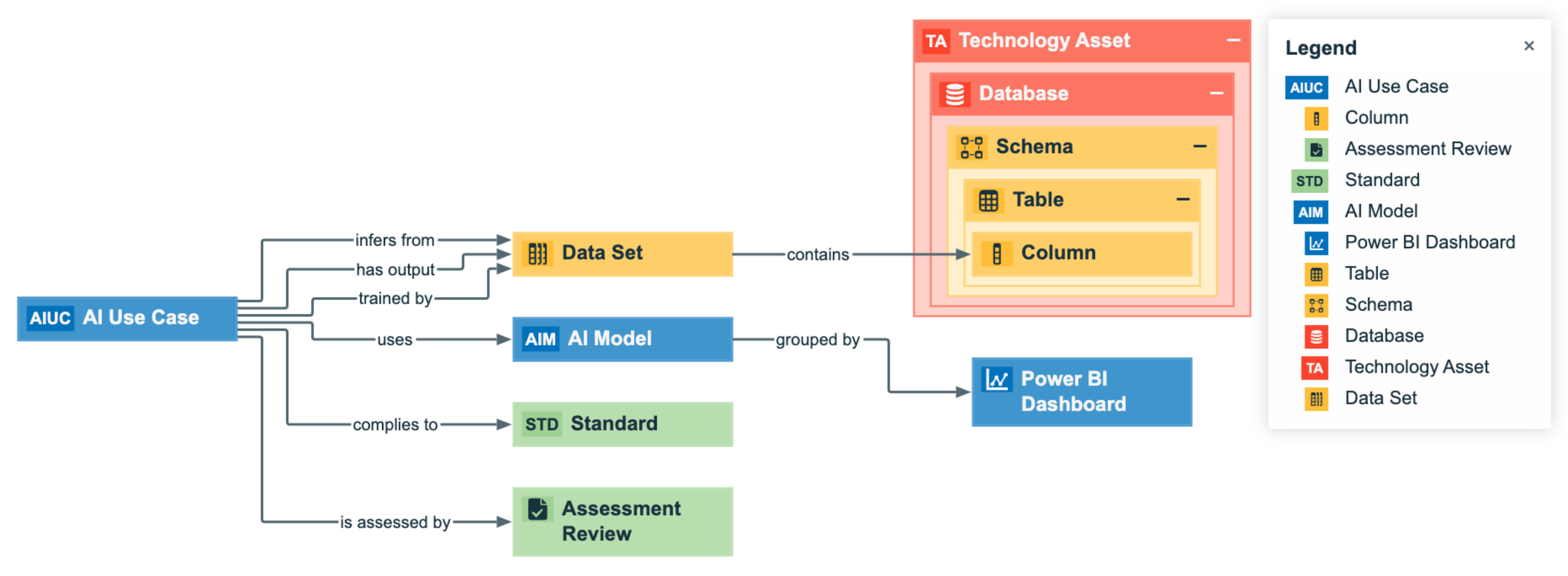

Centralising, organising, and structuring your data to ensure accessibility and compliance with current standards is crucial.

What is AI Governance?

AI governance, simply put, refers to the implementation of rules, processes, and responsibilities to maximise the value of automated data products while making sure that they adhere to ethical practices, mitigate risks, comply with legal requirements, and protect privacy.

Our services

Impact Assessment

Gain insight into the potential social, ethical, and economic consequences of your AI systems.

Strategy

Lay a good foundation so that your AI governance initiatives deliver value.

Compliance

Ensure compliance with legal regulations and policies such as the AI Act.

Training

Educate stakeholders on ethical guidelines, regulatory frameworks, and best practices.

Blogs on AI Governance

Collibra’s AI Governance: A Chimpan-Z Use Case

How to navigate the challenges & opportunities of AI Governance

Why Frugal AI needs AI Data Governance

Common Questions

AI governance, simply put, refers to the implementation of rules, processes, and responsibilities to maximise the value of automated data products by ensuring they adhere to ethical practices, mitigate risks, comply with legal requirements, and protect privacy.

So, how do you ensure effective AI governance? It starts with collaboration—bringing together policymakers, technologists, ethicists, and stakeholders to shape a collective vision for the responsible use of AI.

It requires robust regulatory frameworks that adapt to the ever-evolving nature of AI technology, striking a balance between fostering innovation and safeguarding against potential harms. And perhaps most importantly, it demands a commitment to ethical principles that prioritise human well-being above all else.

AI governance is essential in ensuring that AI technologies are developed and used ethically, transparently, and accountably. It helps manage risks, ensures legal compliance, builds trust, and fosters global cooperation. Ultimately, it promotes the responsible and beneficial impact of AI on society while minimising potential harms.

Key components of AI governance include ethical guidelines, transparency measures, accountability mechanisms, risk assessment protocols, legal compliance frameworks, and mechanisms for stakeholder engagement and participation.

Interested in our AI Governance services?

Could you use some help with the implementation of AI governance within your organisation? Are you looking for help in setting up your strategy or carrying out an impact assessment?

Contact us for more information or to request a demo.