What is the AI Act?

Technology is an ever-changing field, and with emerging technologies constantly on the rise, ethical and responsible development needs not only to be considered but also to be ensured. The European Union (EU) regards these matters as urgent and has therefore been drafting regulations that businesses and individuals must comply with. In this spirit, the EU introduced the AI-Act, which aims to ensure that artificial intelligence (AI) systems used in the EU are safe, fair and transparent. This article examines how the AI-Act defines AI, how it classifies systems into risk categories, and what the consequences of these categories are.

The definition of AI according to the AI-Act

To regulate AI effectively, it is essential to first establish a precise definition of what constitutes AI. The definition of AI, according to the AI-Act, initially revolved around the idea of AI systems having specific, well-defined purposes. AI systems were expected to operate within the boundaries of their intended purposes as outlined in their documentation. For instance, one AI system might be designed for separating waste, another for autonomous vehicle control. These systems were task-oriented, and their intended purposes were clearly stated and documented.

The landscape shifted when models like ChatGPT arrived, challenging the AI-Act’s established definition of AI. ChatGPT does not have a specific, predefined purpose: it is a broad and flexible model that can perform a wide range of tasks. Its rapid rise prompted the European Parliament to amend the AI-Act quickly. Parliament recognized that this new breed of AI model, often referred to as a “foundation model”, did not neatly align with the established understanding of AI systems, so the legislation had to adapt. As a result, the AI-Act now covers both task-oriented systems developed for specific purposes and foundation models like ChatGPT.

Unacceptable risk, high risk & low risk

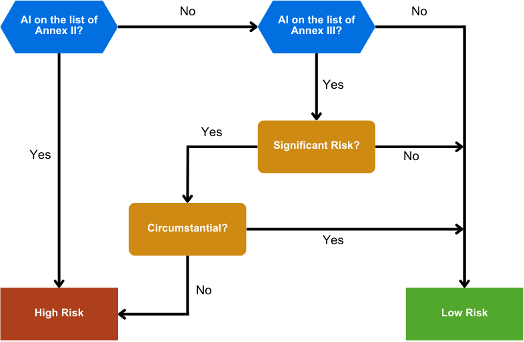

If the AI-Act applies, organisations need to assess which risk category their AI systems fall into. The AI-Act distinguishes three levels of risk:

- Unacceptable Risk: This category encompasses AI applications that are considered extremely harmful or that pose severe risks to individuals’ safety, fundamental rights, or societal values. For instance, AI systems designed for social scoring are classified as posing an unacceptable risk. All applications deemed to pose an unacceptable risk are forbidden.

- High Risk: Applications in fields specified in Annex II of the AI-Act, such as transportation (trains, airplanes, etc.), are automatically considered high risk. This is because AI failures in these critical domains could pose substantial risks to public safety and well-being.

- Low Risk: Applications that do not meet the criteria of either unacceptable risk or high risk will be classified as low risk.

Distinguishing between these three risk categories is not always straightforward. For example, applications in fields listed in Annex III, such as insurance or access to education, can be either high risk or low risk depending on various factors. In these cases, classification involves evaluating whether there is a risk to fundamental rights and freedoms and, if so, whether that risk is significant.

When the application’s primary use has the potential to significantly infringe upon fundamental rights, it is classified as high risk. If the risk only arises from an incidental (circumstantial) or indirect use of the AI, the application is classified as low risk.
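The decision logic described above can be sketched as a small Python function. This is a deliberately simplified model under stated assumptions: the `AISystemProfile` fields, the `RiskCategory` enum and the `classify` function are hypothetical names, and each boolean stands in for what is in practice a legal assessment rather than a simple flag.

```python
from dataclasses import dataclass
from enum import Enum


class RiskCategory(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LOW = "low"


@dataclass
class AISystemProfile:
    """Hypothetical summary of an AI system; every field is an illustrative assumption."""
    prohibited_practice: bool         # e.g. designed for social scoring
    annex_ii_field: bool              # e.g. AI used in trains or airplanes
    annex_iii_field: bool             # e.g. insurance or access to education
    risk_to_fundamental_rights: bool  # is there any risk to fundamental rights?
    risk_is_significant: bool         # if so, is that risk significant?
    risk_from_primary_use: bool       # False if the risk only stems from incidental or indirect use


def classify(system: AISystemProfile) -> RiskCategory:
    """Simplified three-tier classification following the article's description."""
    # Prohibited practices are forbidden outright, whatever their field.
    if system.prohibited_practice:
        return RiskCategory.UNACCEPTABLE
    # Applications in Annex II fields are automatically high risk.
    if system.annex_ii_field:
        return RiskCategory.HIGH
    # Annex III fields are high risk only when a significant risk to
    # fundamental rights stems from the system's primary use.
    if (
        system.annex_iii_field
        and system.risk_to_fundamental_rights
        and system.risk_is_significant
        and system.risk_from_primary_use
    ):
        return RiskCategory.HIGH
    # Everything else falls into the low-risk category.
    return RiskCategory.LOW


# Usage: an insurance model whose risk to fundamental rights only arises indirectly.
insurance_tool = AISystemProfile(
    prohibited_practice=False,
    annex_ii_field=False,
    annex_iii_field=True,
    risk_to_fundamental_rights=True,
    risk_is_significant=True,
    risk_from_primary_use=False,
)
print(classify(insurance_tool))  # RiskCategory.LOW
```

Ordering the checks from most to least severe mirrors the text: a prohibited practice is forbidden regardless of its field, and only systems that pass every other check fall through to low risk.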

The following chart clarifies this classification process:

What are the consequences of these classifications?

AI deemed to pose an unacceptable risk is forbidden. AI deemed to be low risk faces only minimal transparency requirements: a company should notify users that they are dealing with an AI system. When AI is classified as high risk, the requirements are extensive. Companies should ensure:

- Transparency and (technical) Documentation: Developers must provide detailed documentation about the AI system’s design, development, and functionality. This includes information about the intended purpose, limitations, and potential risks of the AI system.

- Record-Keeping: Developers are required to keep records of all AI system activities, including updates, maintenance, and incidents. These records should be retained for an appropriate period depending on the model.

- Information for users: Users of high-risk AI systems must be provided with clear and easily understandable information about the AI’s capabilities and limitations. They should be aware that they are interacting with an AI system.

- Human Oversight: High-risk AI systems should have mechanisms for human oversight and intervention. Users must be able to take control or override the AI’s decisions, especially in situations where it may pose risks.
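The record-keeping and human-oversight requirements can be illustrated with a minimal Python sketch, assuming an AI system that returns one decision per case. The `OverseenModel` wrapper, its `decide` and `override` methods, and the in-memory `log` list are hypothetical; a real system would write records to durable storage and would also record updates, maintenance, and incidents, which this sketch leaves out.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Optional


@dataclass
class OverseenModel:
    """Hypothetical wrapper that records every decision and lets a human override it."""
    predict: Callable[[dict], str]                  # the underlying AI system (assumed interface)
    log: list[dict] = field(default_factory=list)  # in-memory stand-in for durable record storage

    def decide(self, case_id: str, features: dict) -> str:
        # The AI proposes a decision, which is recorded before it is returned.
        decision = self.predict(features)
        self._record("decision", case_id, decision)
        return decision

    def override(self, case_id: str, new_decision: str, reviewer: str) -> str:
        # A human reviewer replaces the AI's decision; the override itself is recorded too.
        self._record("override", case_id, new_decision, reviewer=reviewer)
        return new_decision

    def _record(self, event: str, case_id: str, decision: str,
                reviewer: Optional[str] = None) -> None:
        # Each record carries a UTC timestamp so activities can be reconstructed later.
        self.log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "case_id": case_id,
            "decision": decision,
            "reviewer": reviewer,
        })


# Usage: a toy scoring rule stands in for the AI system.
model = OverseenModel(predict=lambda f: "reject" if f["score"] < 0.5 else "accept")
model.decide("case-1", {"score": 0.3})                  # the AI proposes "reject"
model.override("case-1", "accept", reviewer="j.doe")    # a human overrides it
print(json.dumps(model.log, indent=2))
```

Recording the override as a separate event, rather than editing the original entry, keeps both the AI's proposal and the human intervention visible in the record.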

Final Remarks on the AI Act

The AI-Act lays the groundwork for a comprehensive framework for AI governance, addressing AI risk, documentation, and transparency. In our next article about the AI-Act, we will dive deeper into the ongoing discussions surrounding it, describe the global context in which it is being developed, and provide key dates.

Want to know more about the AI-Act, or do you need help governing your AI models? Don’t hesitate to reach out via email or the contact form on our website!

Frequently asked questions:

What is the AI Act?

The AI Act aims to ensure that artificial intelligence (AI) systems used in the EU are safe, fair and transparent. This article examines how the AI-Act defines AI, how it classifies systems into risk categories, and what the consequences of these categories are.

What is unacceptable risk?

This category encompasses AI applications that are considered extremely harmful or that pose severe risks to individuals' safety, fundamental rights, or societal values. For instance, AI systems designed for social scoring are classified as posing an unacceptable risk. All applications deemed to pose an unacceptable risk are forbidden.

What is high risk?

Applications in fields specified in Annex II of the AI-Act, such as transportation (trains, airplanes, etc.), are automatically considered high risk. This is because AI failures in these critical domains could pose substantial risks to public safety and well-being.

What is low risk?

Applications that do not meet the criteria of either unacceptable risk or high risk are classified as low risk.

What must companies do when their AI system is high risk?

Companies should ensure, among other things:

- Transparency and (technical) Documentation

- Record-Keeping

- Information for users

- Human Oversight

Meeting these requirements calls for good data governance and data intelligence.